Introduction to Apache Airflow

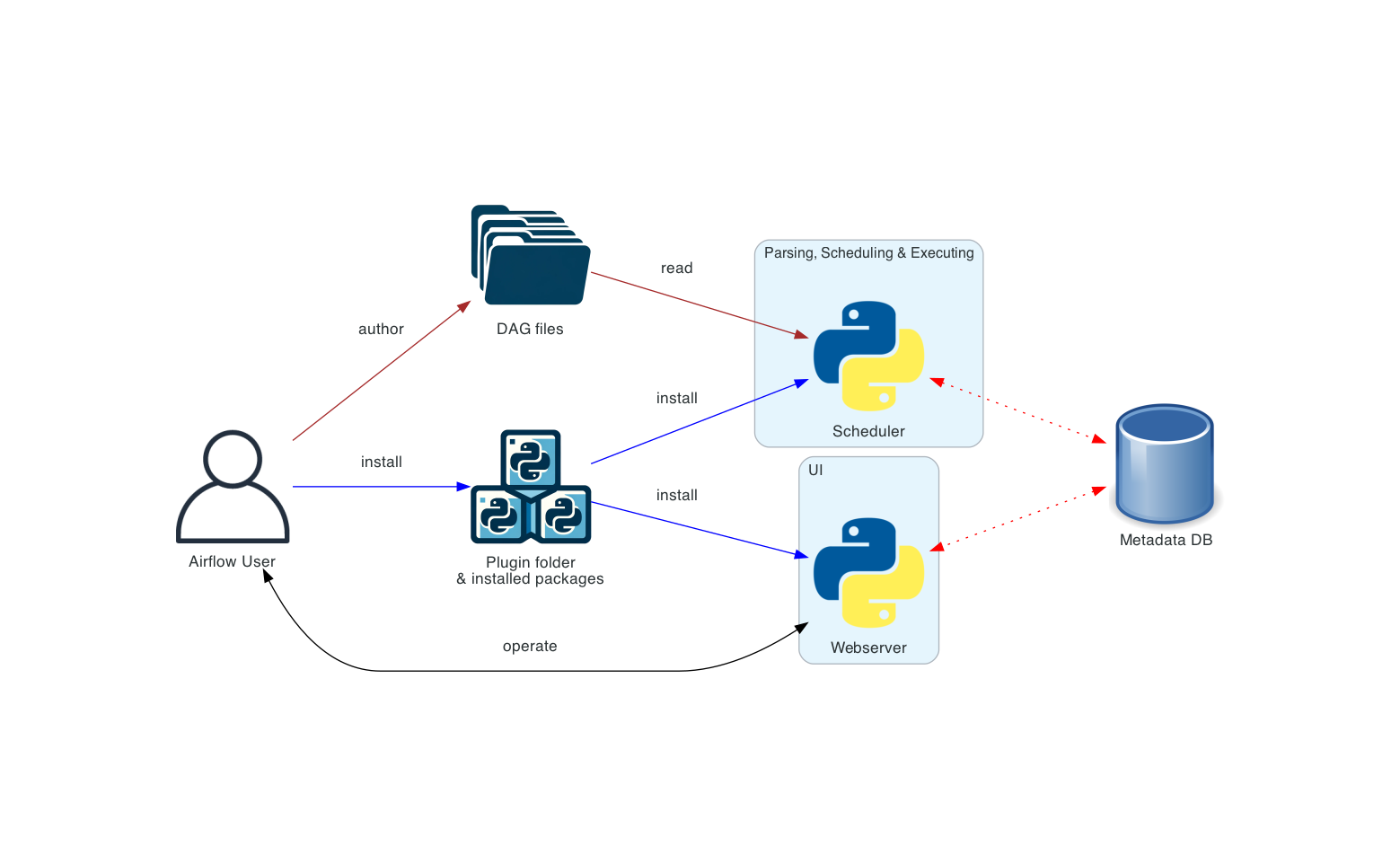

Apache Airflow is an open-source platform designed to help users author, schedule, and monitor complex workflows or “data pipelines.” Developed by Airbnb and now an Apache Software Foundation project, Airflow is widely used in data engineering and ETL (Extract, Transform, Load) processes to automate and visualize workflows, making it easier for engineers to manage data operations at scale.

Airflow GitHub Repository: Apache Airflow GitHub

Key Features of Apache Airflow

Airflow is robust and highly customizable, with features that make it suitable for handling complex, data-driven workflows across various domains.

- Directed Acyclic Graphs (DAGs): Workflows in Airflow are defined as DAGs, where each task is a node in the graph and each dependency between tasks is a directed edge. Because the graph has no cycles, there is always a valid order in which to run the tasks.

- Python-Based: Airflow is written in Python, which makes it accessible and customizable for Python developers. DAGs are defined in Python code, so they can use custom Python functions and libraries.

- Built-in Scheduler: Airflow's built-in scheduler runs tasks at specified intervals or in response to triggering events.

- Web Interface: Airflow includes a rich web-based user interface for monitoring and managing workflows. You can view the state of tasks, troubleshoot failures, trigger re-runs, and explore task logs from this UI.

- Task-Level Retry Logic: You can configure retries, timeouts, and alerts for individual tasks so that workflows recover from transient failures.

- Supports Multiple Executors: Airflow offers several executors (such as LocalExecutor, CeleryExecutor, and KubernetesExecutor), so the same workflows can run on a single machine or scale out across a distributed setup.
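To make the DAG idea from the list above concrete, here is a minimal sketch that uses only the Python standard library (not Airflow itself) to derive a run order from task dependencies. The task names and the `execution_order` helper are hypothetical and chosen for illustration; Airflow's own scheduler is considerably more involved.

```python
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each key is a task, each value is the set of
# upstream tasks that must finish before that task can start.
upstream = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_order(upstream_map):
    """Return one valid run order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(upstream_map).static_order())


order = execution_order(upstream)
print(order)

# A cyclic "workflow" is rejected, which is why workflows must be acyclic.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("cycle detected: not a valid DAG")
```

Because every task here has exactly one upstream task, only one order is possible. With branching graphs, several orders can be valid, and independent tasks could run in parallel.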

Getting Started with Apache Airflow

To get started, install Airflow on your local machine or on a cloud-based server. Installation methods include pip, Docker, and Helm (for Kubernetes clusters).

Prerequisites

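- Python 3.7 or higher (recent Airflow releases require a newer Python, so check the supported versions for the release you install)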

- Virtual environment (recommended)

- Basic knowledge of Python and task automation
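As a quick way to check the first two prerequisites, the following sketch reports whether the running interpreter meets a minimum version and whether a virtual environment is active. The helper names are hypothetical, and the minimum version is simply the one listed above; adjust it to match the Airflow release you plan to install.

```python
import sys

MINIMUM_PYTHON = (3, 7)  # assumption: the minimum listed in the prerequisites


def meets_minimum(minimum=MINIMUM_PYTHON):
    """True if the running interpreter is at least `minimum` (major, minor)."""
    return sys.version_info[:2] >= minimum


def in_virtualenv():
    """True inside a venv, where sys.prefix differs from sys.base_prefix."""
    return sys.prefix != sys.base_prefix


print("Python version OK:", meets_minimum())
print("Virtual environment active:", in_virtualenv())
```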

Installation Guide

You can install Airflow easily with pip by following these steps:

    # Create and activate a virtual environment
    python3 -m venv airflow_venv
    source airflow_venv/bin/activate

    # Set the Airflow home directory
    export AIRFLOW_HOME=~/airflow

    # Install Airflow via pip
    pip install apache-airflow

For more details, see the official Airflow Installation Guide.
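The Airflow project also publishes constraint files that pin dependency versions for a given Airflow and Python version, which tends to make pip installs more reproducible. The sketch below builds such an install command. The helper names are hypothetical, the version numbers are example values only, and the URL pattern should be confirmed against the official installation guide.

```python
import sys


def constraints_url(airflow_version, python_version):
    """Build the constraints-file URL for an Airflow/Python version pair."""
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-{python_version}.txt"
    )


def pip_install_command(airflow_version, python_version=None):
    """Return a pip command that installs a pinned Airflow with constraints."""
    if python_version is None:
        # Default to the interpreter running this script, e.g. "3.11"
        python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    url = constraints_url(airflow_version, python_version)
    return f'pip install "apache-airflow=={airflow_version}" --constraint "{url}"'


# Example values only; substitute the versions you actually use.
print(pip_install_command("2.9.3", "3.11"))
```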

Creating a Basic DAG

To create a workflow in Airflow, define a DAG in Python. Here’s an example of a simple DAG with two tasks that execute sequentially.

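    from datetime import datetime

    from airflow import DAG
    # Airflow 2.x import path; newer releases rename DummyOperator to
    # EmptyOperator (airflow.operators.empty).
    from airflow.operators.dummy import DummyOperator

    # Default arguments applied to every task in the DAG
    default_args = {
        'owner': 'airflow',
        'start_date': datetime(2023, 1, 1),
        'retries': 1,  # retry a failed task once
    }

    # Define the DAG
    dag = DAG(
        'example_dag',
        default_args=default_args,
        schedule_interval='@daily',  # one run per day; newer releases use `schedule`
    )

    # Define tasks
    start = DummyOperator(task_id='start', dag=dag)
    end = DummyOperator(task_id='end', dag=dag)

    # Set task dependencies: start must finish before end runs
    start >> end

This DAG runs once a day and contains two tasks, start and end: start runs first, followed by end. Because start_date lies in the past, Airflow 2.x by default schedules catch-up runs for each missed day once the DAG is enabled; pass catchup=False to the DAG to skip them.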
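The `>>` syntax for dependencies relies on Python operator overloading. The following toy sketch shows one way such an operator can be built with `__rshift__`. The `Task` class is a hypothetical stand-in written for this illustration and is not Airflow's actual implementation.

```python
class Task:
    """Toy stand-in for an operator; not Airflow's implementation."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = []    # tasks that must run before this one
        self.downstream = []  # tasks that run after this one

    def __rshift__(self, other):
        """Implement `self >> other`: `other` runs after `self`."""
        self.downstream.append(other)
        other.upstream.append(self)
        # Returning `other` lets `a >> b >> c` chain from left to right.
        return other


start = Task("start")
middle = Task("middle")
end = Task("end")

start >> middle >> end

print([t.task_id for t in start.downstream])
print([t.task_id for t in end.upstream])
```

Returning the right-hand task from `__rshift__` is the design choice that makes chaining work: `start >> middle` evaluates to `middle`, which then becomes the left-hand side of `>> end`.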

Running Airflow Locally

Once you have your DAG defined, start the Airflow services.

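    # Initialize the metadata database
    # (newer Airflow 2.x releases deprecate this in favor of `airflow db migrate`)
    airflow db init

    # Start the web server
    airflow webserver --port 8080

    # Start the scheduler in a separate terminal, since the web server
    # keeps running in the first one
    airflow scheduler

After the web server starts, go to http://localhost:8080 to access the Airflow dashboard. If the login page asks for credentials, create an account first with the `airflow users create` command. From the dashboard, you can enable your DAG and view task statuses.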

Use Cases for Apache Airflow

Airflow is widely used in industries that rely on extensive data pipelines, including:

- Data Engineering: ETL workflows for data ingestion, transformation, and loading.

- Machine Learning: Automating ML model training, retraining, and deployment workflows.

- DevOps: Managing infrastructure workflows for provisioning and monitoring.

Advantages of Using Apache Airflow

- Flexible: Highly customizable and easy to adapt for complex use cases.

- Extensible: Integrates with popular cloud providers, databases, and big data tools.

- Resilient: Built-in retry mechanisms and error handling.

- Scalable: Different executors support local and distributed setups.
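To illustrate the retry behavior mentioned above, here is a minimal sketch of task-level retries in plain Python. The `run_with_retries` helper and the `flaky_task` function are hypothetical, and the logic is a simplification rather than how Airflow implements retries internally.

```python
import time


def run_with_retries(task, retries=1, retry_delay=0.0):
    """Call task(); on an exception, retry up to `retries` more times.

    Returns a (result, attempts) tuple, or re-raises the last exception
    once all attempts are used up.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > retries:
                raise  # no retries left: surface the failure
            time.sleep(retry_delay)  # wait before the next attempt


calls = {"count": 0}


def flaky_task():
    """Fail on the first call, succeed on the second."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise RuntimeError("transient failure")
    return "ok"


result, attempts = run_with_retries(flaky_task, retries=1)
print(result, attempts)
```

With `retries=1`, as in the example DAG's default arguments, the task gets two attempts in total: the original run plus one retry.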

Further Resources

- Official Documentation: For comprehensive information on all features and APIs, refer to the Airflow Documentation.

- Airflow Tutorial: A great starting point to create your first DAG is available in the Airflow Quick Start Tutorial.

- Community: Apache Airflow has an active community, and Apache Airflow GitHub Discussions is a good place for support and interaction.

Conclusion

Apache Airflow is a flexible and powerful workflow orchestration tool that simplifies the management of data-driven processes for engineers and developers. With its extensible features, customizable configuration, and active community, it remains a leading choice for data and task automation.

Whether you’re automating ETL pipelines, monitoring data transformations, or managing ML workflows, Airflow provides the tools needed to simplify and visualize complex workflows.